Deep learning is getting lots of attention lately, and for good reason. It's making a big impact in areas such as computer vision and natural language processing. In this guide, we'll help you understand why it has become so popular and address three key questions: What is deep learning? How is it used in the real world? And how can you get started?

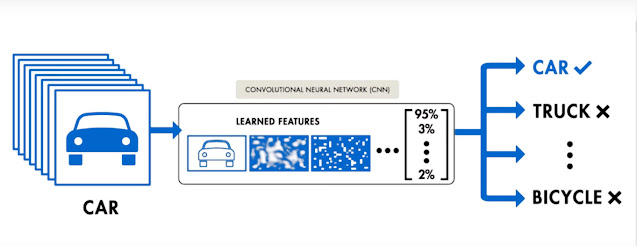

So, what is deep learning? Deep learning is a machine learning technique that learns features and tasks directly from data. The data can be images, text, or sound. In this guide, I will be using images, but these concepts apply to other types of data as well. Deep learning is often referred to as end-to-end learning, so let's take a look at an example. Say I have a set of images and I want to recognize which category of objects each image belongs to: cars, trucks, or boats.

I start with a labeled set of images, or training data. The labels correspond to the desired outputs of the task. The deep learning algorithm needs these labels because they tell the algorithm about the specific features and objects in the image. The algorithm then learns how to classify input images into the desired categories. We use the term end-to-end learning because the task is learned directly from data.

Another example is a robot learning how to control the movement of its arm to pick up a specific object. In this case, the task being learned is how to pick up an object given an input image.

Many of the techniques used in deep learning today have been around for decades. For example, deep learning has been used to recognize handwritten postal codes in the mail service since the 1990s. The use of deep learning has surged over the last five years, primarily due to three factors. First, deep learning methods can now be more accurate than people at classifying images. Second, GPUs now enable us to train deep networks in less time. Finally, the large amounts of labeled data required for deep learning have become accessible over the last few years.

Most deep learning methods use neural network architectures, which is why you often hear deep learning models referred to as deep neural networks. One popular type of deep neural network is the convolutional neural network, or CNN.

A CNN is especially well suited for working with image data. The term deep usually refers to the number of hidden layers in the neural network. While traditional neural networks contain only two or three hidden layers, some recent deep networks have as many as 150 layers.

Now that you understand these key deep learning concepts, here are a few examples of how they are used. One is recognizing or classifying objects into categories, where a deep network classifies objects on a desk. Another is detecting or locating objects of interest in an image, where we use deep learning to detect a stop sign.
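To tie the ideas of hidden layers and classification together, here is a minimal sketch of a small CNN written with PyTorch, the framework the rest of this guide introduces. It assumes PyTorch is installed. The class name `TinyCNN`, the layer sizes, and the 32x32 image size are illustrative assumptions, and the network is untrained, so its predictions are arbitrary.

```python
import torch
from torch import nn

# Category names taken from the cars/trucks/boats example above.
CLASSES = ["car", "truck", "boat"]


class TinyCNN(nn.Module):
    """A deliberately small CNN; all layer sizes are illustrative assumptions."""

    def __init__(self, num_classes: int = len(CLASSES)):
        super().__init__()
        # Hidden layers: two convolution + pooling stages that learn image features.
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=3, padding=1),   # 3 input channels (RGB)
            nn.ReLU(),
            nn.MaxPool2d(2),                             # 32x32 -> 16x16
            nn.Conv2d(8, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool2d(2),                             # 16x16 -> 8x8
        )
        # Output layer: one score per category.
        self.classifier = nn.Linear(16 * 8 * 8, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.features(x)
        x = torch.flatten(x, start_dim=1)  # flatten everything except the batch
        return self.classifier(x)


model = TinyCNN()
images = torch.rand(4, 3, 32, 32)   # a fake batch of four 32x32 RGB images
scores = model(images)              # one row of three scores per image
predicted = scores.argmax(dim=1)    # index of the highest-scoring category
labels = [CLASSES[i] for i in predicted.tolist()]
```

A real "deep" network stacks many more of these convolution stages; two are used here only to keep the sketch readable.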

Now let us learn what PyTorch is and how deep learning is done with PyTorch.

PyTorch is a deep learning framework and a scientific computing package. The scientific computing aspect of PyTorch is primarily a result of PyTorch's tensor library and its associated tensor operations.

A tensor is an n-dimensional array, or nd-array. One of the most popular scientific computing packages for Python is NumPy.
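To make the n-dimensional array idea concrete, here is a minimal sketch that builds tensors of several dimensions, converts between NumPy arrays and tensors, and moves a tensor to a GPU when one is present. It assumes both `torch` and `numpy` are installed; the variable names and shapes are illustrative only.

```python
import numpy as np
import torch

# Tensors with an increasing number of dimensions.
scalar = torch.tensor(3.0)               # 0-d
vector = torch.tensor([1.0, 2.0, 3.0])   # 1-d
matrix = torch.zeros(2, 3)               # 2-d
batch = torch.zeros(4, 3, 32, 32)        # 4-d, e.g. a batch of RGB images

ranks = [t.dim() for t in (scalar, vector, matrix, batch)]

# NumPy round trip: from_numpy shares memory with the source array.
array = np.arange(6, dtype=np.float32).reshape(2, 3)
tensor = torch.from_numpy(array)
doubled = (tensor * 2).numpy()

# Move to a GPU only if one is available; otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
on_device = tensor.to(device)
back_on_cpu = on_device.cpu()
```

On a machine without a GPU, `device` is simply the CPU and the last two lines leave the data where it was.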

NumPy is the go-to package for nd-arrays. PyTorch's tensor library mirrors NumPy's n-dimensional array capabilities very closely and, in addition, is highly interoperable with NumPy itself. With PyTorch tensors, GPU support is built in. It's very easy with PyTorch to move tensors to and from a GPU if we have one installed on our system. For now, just know that PyTorch tensors and their associated operations are very similar to NumPy n-dimensional arrays.

Before we dive any deeper into the PyTorch feature set, let's take a walk down memory lane and look at a brief history of PyTorch. A brief history here is a must because PyTorch is a relatively young framework. The initial release of PyTorch was in October of 2016, and before PyTorch was created, there was, and still is, another framework called Torch.

Torch is a machine learning framework that's been around for quite a while and is based on the Lua programming language. The connection between PyTorch and this Lua version, called Torch, exists because many of the developers who maintain the Lua version are the same individuals who created PyTorch. Notably, Soumith Chintala is credited with bootstrapping the PyTorch project, and his reason for creating PyTorch is pretty simple: the Lua version of Torch was aging, so a newer version written in Python was needed. As a result, PyTorch came to be.

In his words: “When I first started, I was maintaining a framework called Torch. It was based out of Lua, which is this language that's obscure, but there's a big deep learning framework called Torch that used it, and it had a Keras-like model, and it worked pretty well, except that Lua as an ecosystem is fairly small. Also, Torch's design has existed from like 2009-2010, and you know how, as a field, as we move in terms of research, the tooling has to move with you; otherwise, tooling becomes irrelevant. It's not flexible enough for what researchers want today. So Torch's design was aging, and I was like, I need a new tool. And TensorFlow came like a year before that, and we tried it, and it just wasn't cutting it for us. Like, we couldn't debug day-to-day things, and for us, in a very personal opinion, it was painful to use. So then we were like, okay, why don't we just build something that's Torch-based but in Python, that has a new design from all the lessons we learned from the last few years? And that's pretty much how it started.”

On the project's GitHub page, we can go to Insights and then Contributors, which shows the contributors by number of commits. This is a good way to get an idea of who is working on a project.

All right, let's check out the deep learning specific features of PyTorch. Programming neural networks and deep learning definitely requires the use of tensors, but if we want a rich framework, we need more than tensors. PyTorch gives us just that. Here is a list of PyTorch packages and their corresponding descriptions.

The following are the primary PyTorch components we'll be learning about and using as we build neural networks in this guide. The torch package is the top-level package that contains all other packages as well as the tensor library. The next two packages, torch.nn and torch.autograd, are the primary workhorse packages of PyTorch. torch.nn, where nn stands for neural network, contains classes and modules like layers, weights, and forward functions. torch.nn is where neural networks are built, so we'll spend a lot of time working directly with it. torch.autograd is a subpackage that handles the derivative calculations needed to optimize our neural network weights.

At their core, all deep learning frameworks have two features: a tensor library and a package for computing derivatives. For PyTorch, these two are torch and torch.autograd. For the typical deep learning functions and optimization algorithms, we have torch.nn.functional and torch.optim. torch.nn.functional is the functional interface that gives us access to functions like loss functions, activation functions, and convolution operations, and torch.optim gives us access to typical optimization algorithms like SGD and Adam.

torch.utils is a subpackage that contains utility classes, like datasets and data loaders, that make data preprocessing much easier. Finally, we have torchvision, which is a separate package that provides access to popular datasets, model architectures, and image transformations for computer vision.
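As a rough illustration of how these packages fit together, here is a minimal training-loop sketch. It assumes PyTorch is installed and uses made-up random data, so the numbers it produces mean nothing. The model shape, learning rate, and epoch count are arbitrary choices for the example, and torchvision is left out to keep it self-contained.

```python
import torch
from torch import nn, optim
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)  # make the toy run repeatable

# Made-up data: 64 samples with 10 features each and a label in {0, 1, 2}.
features = torch.randn(64, 10)
labels = torch.randint(0, 3, (64,))

# torch.utils.data: wrap the tensors and serve them in shuffled batches.
loader = DataLoader(TensorDataset(features, labels), batch_size=16, shuffle=True)

# torch.nn: layers that hold the weights.
model = nn.Sequential(nn.Linear(10, 16), nn.ReLU(), nn.Linear(16, 3))

# torch.optim: the optimization algorithm (plain SGD here).
optimizer = optim.SGD(model.parameters(), lr=0.1)

epoch_losses = []
for epoch in range(5):
    total = 0.0
    for x, y in loader:
        optimizer.zero_grad()                # clear gradients from the last step
        loss = F.cross_entropy(model(x), y)  # torch.nn.functional: loss function
        loss.backward()                      # torch.autograd: compute derivatives
        optimizer.step()                     # update the weights
        total += loss.item()
    epoch_losses.append(total / len(loader))  # average loss for this epoch
```

Swapping `optim.SGD` for `optim.Adam` is a one-line change, which is part of why the optimizer lives in its own package.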

Let's talk about the prospects of jumping in and learning PyTorch. For beginners to deep learning and neural networks, the top reason for learning PyTorch is that it is a thin framework that stays out of the way. When we build neural networks with PyTorch, we are super close to programming neural networks from scratch. The experience of programming in PyTorch is as close as it gets to the real thing. After understanding the process of programming neural networks with PyTorch, it's pretty easy to see how the process works from scratch, say, in pure Python. This is why PyTorch is great for beginners. So, to answer the question of what you stand to gain: you'll have a much deeper understanding of neural networks and the deep learning process after using PyTorch. One of the top philosophies of PyTorch is to stay out of the way, and this lets us focus more on neural networks and less on the actual framework.

The Philosophy of PyTorch

· Stay out of the way of the user; don't overburden them with abstractions or complicated API procedures.

· Cater to the impatient: things should always be interactive and quick, with no compilation time.

· Promote a linear and interactive code flow.

· Interoperate with the Python ecosystem as naturally as possible.

· Be as fast as any other package that provides the same features.

These philosophies contribute greatly to PyTorch's modern, Pythonic, and thin design. When we create neural networks in PyTorch, we are just writing and extending standard Python classes, and when we debug PyTorch code, we are using the standard Python debugger.

Debugging

- PyTorch is a Python extension. In the deep learning framework space, it is not obvious that a framework with a Python API will also let you use the Python debugger. Many of these frameworks provide black boxes: you create your model in Python, but when you run it, it runs in some kind of C++ runtime, so you can't set Python breakpoints, see what's going on, print things, and so on.

- Use your favorite Python debugger.

You can use PyCharm, you can use pdb, or you can use print, for example, and it's about as smooth as debugging other parts of your Python code.
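As a small sketch of what this looks like in practice, the network below is an ordinary Python class, and its forward method can print intermediate values or pause in the debugger like any other Python code. It assumes PyTorch is installed. The class `DebuggableNet` and its `seen_shapes` attribute are hypothetical names made up for this example.

```python
import torch
from torch import nn


class DebuggableNet(nn.Module):
    """Hypothetical two-layer network used only to illustrate debugging."""

    def __init__(self):
        super().__init__()
        self.hidden = nn.Linear(4, 8)
        self.out = nn.Linear(8, 2)
        self.seen_shapes = []  # plain Python list, filled while forward() runs

    def forward(self, x):
        h = torch.relu(self.hidden(x))
        # forward() is ordinary Python, so print works on intermediate values.
        print("hidden activations:", tuple(h.shape), "mean =", h.mean().item())
        self.seen_shapes.append(tuple(h.shape))
        # Uncomment to pause here in pdb and inspect h interactively:
        # breakpoint()
        return self.out(h)


net = DebuggableNet()
result = net(torch.randn(3, 4))
```

With the `breakpoint()` line enabled, execution stops inside `forward`, where `h` can be inspected from the pdb prompt or from an IDE such as PyCharm.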

So PyTorch is a good framework for learning about neural networks, mainly because of its simplicity, and this simplicity is also the characteristic that strengthens PyTorch's longevity as a framework. Another characteristic often associated with PyTorch is that it is the preferred framework for research. The reason for this research suitability has to do with a technical design consideration.

To optimize neural networks, we need to calculate derivatives, and to do this computationally, deep learning frameworks use what are called computational graphs. Computational graphs are used to graph the function operations that occur on tensors inside neural networks. These graphs are then used to compute the derivatives needed to optimize the neural network's weights. PyTorch uses a dynamic computational graph. This means that the graph is generated on the fly as the operations occur. This is in contrast to static graphs, which are fully determined before the actual operations occur. It just so happens that many of the cutting-edge research topics in deep learning require or benefit greatly from dynamic graphs.

We are now ready to get PyTorch installed.
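Once PyTorch is installed, the dynamic graph behavior described above can be seen in a few lines. In this minimal sketch, an ordinary Python loop decides how many operations are recorded, so the same function yields a different graph, and a different derivative, depending on its argument. The helper `repeated_square` is a hypothetical name invented for the illustration.

```python
import torch


def repeated_square(x: torch.Tensor, steps: int) -> torch.Tensor:
    """Square x `steps` times; the loop count decides the graph's depth."""
    y = x
    for _ in range(steps):  # ordinary Python control flow
        y = y * y           # each operation is recorded as it executes
    return y


x = torch.tensor(2.0, requires_grad=True)

# One pass: y = x^2, so dy/dx = 2x = 4 at x = 2.
y1 = repeated_square(x, steps=1)
y1.backward()
grad_one_step = x.grad.item()

x.grad = None  # reset the stored gradient before building a different graph

# Two passes: y = x^4, so dy/dx = 4x^3 = 32 at x = 2.
y2 = repeated_square(x, steps=2)
y2.backward()
grad_two_steps = x.grad.item()
```

Nothing about the graph had to be declared ahead of time; a static-graph framework would need the number of steps fixed before running.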
